Note: Unfortunately, the editor / code formatter plugin alters some XML tags. When copying from the examples in this post, take care to check and correct the affected tags (i.e. dependencyManagement, groupId, artifactId, activeByDefault, systemPropertyVariables, containerProfile, arquillian.launch).

Content repositories are complex, central components of an IT infrastructure / content management solution. Consequently, considerable attention is paid to stability and quality management. Alfresco development projects primarily use JUnit for their developer and integration tests. The Alfresco repository can be instantiated in-process within a JUnit test case of a business module by using the Alfresco-provided class ApplicationContextHelper, or it can be run in an embedded container (e.g. Jetty as part of the Maven build). The usual focus is on covering the individual business components in an isolated environment, which differs from a realistic setup through custom component wiring or extensive mocking. Even if test coverage and the success rate of test cases are close to a hundred percent, these kinds of tests offer only limited certainty about the quality of a project. A significant number of unpleasant surprises may be lurking beneath the surface and only strike when the project is tested in real integration / quality environments.

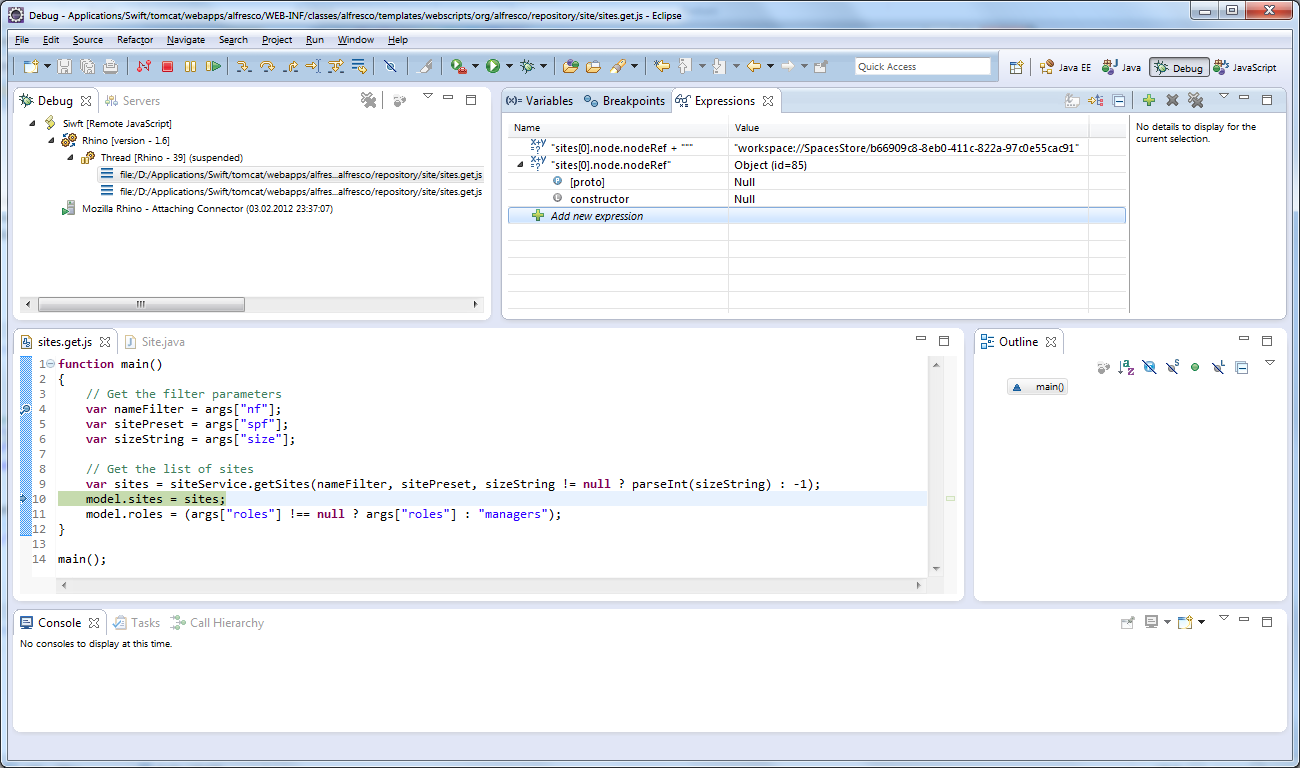

As part of our activities as an Alfresco partner, I have been spending some of my spare time outside of projects and sales support on getting development and integration tests working on Arquillian – a test framework based on JUnit and aiming to support real tests on real environments. I will try to document the various steps, problems, workarounds and solutions I have performed, encountered and come up with in a series of specific blog posts.

Problems with an “Embedded Tomcat” setup

In a lot of development projects, an embedded setup is the simplest and most efficient configuration when it comes to both the turnaround in getting new developers set up and reducing the dependency on local environments. My colleagues and I have tried to set up an embedded Tomcat with Arquillian, but ultimately failed to resolve the various classloading issues that came along with executing Tomcat in the same process. Specifically, the XML APIs that are provided by both Tomcat and the JDK, and are referenced by Alfresco, created various incompatibilities between the Arquillian / Maven / boot classloader and the web application classloader.

Preparation of Tomcat instance / global Alfresco configuration

Running a “managed Tomcat” setup requires the preparation of a local Tomcat instance, which Arquillian will use to deploy the Alfresco Repository and execute tests on. Depending on the Alfresco version to be tested, this will either be a Tomcat 6 or Tomcat 7 installation. It is advisable to use a dedicated Tomcat instance for Arquillian instead of the instance you may have installed via the Alfresco installer.

For Arquillian to deploy Alfresco after the start of Tomcat it is necessary to provide the “manager” web application and configure the tomcat-users.xml for necessary access privileges. All other web applications being bundled with Tomcat (ROOT / host-manager) may be safely removed. A simple configuration of tomcat-users.xml might look like this:

    <?xml version='1.0' encoding='utf-8'?>
    <tomcat-users>
        <role rolename="manager"/>
        <user username="arquillian" password="arquillian" roles="manager"/>
    </tomcat-users>

Providing a working alfresco-global.properties as the basic configuration, along with the necessary JDBC drivers, in <tomcat>/shared/classes helps to keep test cases as free from environment-specific configuration as possible. Setting up the connection to the database, the directory for content storage and other supporting components is of primary concern, but subsystems may also be (pre-)configured to optimize the test environment. It is likely that 80% of test cases will be served by the following default configuration:

- Subsystem “fileServers”: deactivation of CIFS, NFS and FTP (not testable via JUnit)

- Subsystem “email”: deactivation of the IMAP / SMTP servers (not testable via JUnit)

If the subsystem configuration is done the proper way, i.e. by placing configuration files into <tomcat>/shared/classes/alfresco/extension/subsystems/<Subsystem>/…, individual test cases may override the default configuration by using the Java classloader lookup mechanism. Under no circumstances should subsystem configuration be placed in alfresco-global.properties (always considered “bad practice”).
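For the non-subsystem part of the basic configuration described above, a minimal alfresco-global.properties might look like the following sketch. The property keys are the standard Alfresco ones for the content store and the database connection. All values (path, database name, credentials, the choice of PostgreSQL) are assumptions for illustration and need to be adapted to the local environment.

```properties
# Content store location (hypothetical path next to the dedicated Tomcat instance)
dir.root=D:/Applications/Arquillian/alf_data

# Database connection (hypothetical PostgreSQL instance and credentials;
# the matching JDBC driver JAR must be available to Tomcat)
db.driver=org.postgresql.Driver
db.url=jdbc:postgresql://localhost:5432/alfresco_arquillian
db.username=alfresco
db.password=alfresco
```

Subsystem settings are deliberately left out of this file, in line with the recommendation above.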

Project setup

Including Arquillian in a specific development project can require quite specific adaptations. A simple Maven Java project will serve as the base for further demonstration / code snippets. Integrating the example with the Maven SDK is an exercise left to the reader.

A simple project can be created by running mvn archetype:generate -DgroupId={project-packaging} -DartifactId={project-name} -DarchetypeArtifactId=maven-archetype-quickstart -DinteractiveMode=false or using the equivalent wizard in an IDE. Arquillian is included in the Maven lifecycle by adding the following artifact repository and dependencies to the project POM:

    <repositories>
        <repository>
            <id>jboss-public-repository</id>
            <name>JBoss Public Repository</name>
            <url>https://repository.jboss.org/nexus/content/groups/public</url>
        </repository>
    </repositories>

    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.jboss.arquillian</groupId>
                <artifactId>arquillian-bom</artifactId>
                <version>1.0.4.Final</version>
                <scope>import</scope>
                <type>pom</type>
            </dependency>
        </dependencies>
    </dependencyManagement>

    <dependencies>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.8.1</version>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.jboss.arquillian.junit</groupId>
            <artifactId>arquillian-junit-container</artifactId>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.jboss.arquillian.extension</groupId>
            <artifactId>arquillian-service-integration-spring-inject</artifactId>
            <version>1.0.0.Beta1</version>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.jboss.arquillian.extension</groupId>
            <artifactId>arquillian-service-deployer-spring-3</artifactId>
            <version>1.0.0.Beta1</version>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.jboss.shrinkwrap.resolver</groupId>
            <artifactId>shrinkwrap-resolver-impl-maven</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>

These dependencies provide the basic framework for the dynamic creation of Alfresco deployments via the ShrinkWrap API, as well as access to Spring beans of the Alfresco Repository for embedded JUnit tests. If the intended use of Arquillian is limited to running remote tests, the Spring-related dependencies may be dropped.

Connecting Arquillian with a Tomcat instance requires the setup of a container adapter, which is usually done in a specific, selectable profile:

    <profiles>
        <profile>
            <id>tomcat-managed</id>
            <activation>
                <activeByDefault>true</activeByDefault>
            </activation>
            <dependencyManagement>
                <dependencies>
                    <!-- Lock the version, since additional dependencies (i.e. from Alfresco) often clash -->
                    <dependency>
                        <groupId>commons-codec</groupId>
                        <artifactId>commons-codec</artifactId>
                        <version>1.5</version>
                    </dependency>
                </dependencies>
            </dependencyManagement>
            <dependencies>
                <dependency>
                    <groupId>org.jboss.arquillian.container</groupId>
                    <artifactId>arquillian-tomcat-managed-6</artifactId>
                    <version>1.0.0.CR4</version>
                    <scope>test</scope>
                </dependency>
            </dependencies>
        </profile>
    </profiles>

Running tests of Alfresco 4.2 on Tomcat 7 requires the corresponding dependency arquillian-tomcat-managed-7.

The second part of connecting Arquillian with the Tomcat instance is the configuration of the container in arquillian.xml on the classpath of the project. This file can be placed in src/test/resources to provide a global configuration, or within a profile-specific path to allow for an individual configuration per developer. A profile-specific location of the file is usually not necessary, since the individual configuration segments within the file can be selected via the Surefire plugin configuration. A basic example of arquillian.xml might look like this:

    <?xml version="1.0" encoding="UTF-8"?>
    <arquillian xmlns="http://jboss.org/schema/arquillian"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="http://jboss.org/schema/arquillian http://jboss.org/schema/arquillian/arquillian_1_0.xsd"
        xmlns:spring="urn:arq:org.jboss.arquillian.container.spring.embedded_3">

        <container qualifier="tomcat-managed-6" default="true">
            <configuration>
                <!-- Must match HTTP port from Tomcat server configuration file -->
                <property name="bindHttpPort">8680</property>
                <property name="bindAddress">localhost</property>

                <!-- The prepared Tomcat instance -->
                <property name="catalinaHome">D:/Applications/Arquillian/tomcat-repo</property>
                <property name="javaHome">C:/Program Files/Java/jdk1.6.0_30</property>

                <!-- Allow generous Heap and PermGen since we may deploy + start Alfresco multiple times -->
                <property name="javaVmArguments">-Xmx2G -Xms2G -XX:MaxPermSize=1G -Dnet.sf.ehcache.skipUpdateCheck=true -Dorg.terracotta.quartz.skipUpdateCheck=true</property>

                <!-- Must match configured manager from tomcat-users.xml -->
                <property name="user">arquillian</property>
                <property name="pass">arquillian</property>
                <property name="urlCharset">UTF-8</property>
                <property name="startupTimeoutInSeconds">120</property>

                <!-- Local copy of Tomcat server configuration file -->
                <property name="serverConfig">server.xml</property>
            </configuration>
        </container>

        <extension qualifier="spring">
            <!-- Deactivate automatic inclusion of Spring artifacts in deployments as Alfresco already contains them -->
            <property name="auto-package">false</property>
        </extension>
    </arquillian>

The relevant server.xml can be copied to the project classpath from the Tomcat instance configured for Arquillian (src/test/resources). Unfortunately it does not seem possible to simply refer to it within the Tomcat instance by using a file path.

The value of the qualifier attribute of the container element can be used to select the container in a specific profile of the project POM:

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-surefire-plugin</artifactId>
                <configuration>
                    <systemPropertyVariables>
                        <arquillian.launch>${containerProfile}</arquillian.launch>
                    </systemPropertyVariables>
                </configuration>
            </plugin>
        </plugins>
    </build>

    <profiles>
        <profile>
            <id>Dev XY</id>
            <properties>
                <containerProfile>xy-tomcat-managed-6</containerProfile>
            </properties>
        </profile>
    </profiles>

If the JUnit-integration of the IDE is used, Arquillian will also look up the arquillian.xml in the classpath, but the container profiles need to be selected with the -Darquillian.launch system property.
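To make the selection rule tangible, the following stdlib-only sketch mirrors it: if the arquillian.launch system property is set, its value names the container qualifier to use, otherwise the container marked as default applies. The class ContainerSelection and its method are hypothetical helpers for illustration and not part of the Arquillian API. Arquillian performs this lookup internally.

```java
import java.util.Properties;

// Hypothetical helper, not part of Arquillian: mirrors the container selection rule.
final class ContainerSelection {

    // Name of the system property evaluated by Arquillian to pick a container
    static final String LAUNCH_PROPERTY = "arquillian.launch";

    private ContainerSelection() {
    }

    // Returns the qualifier requested via the property, or the default qualifier if none is set
    static String selectedQualifier(Properties props, String defaultQualifier) {
        String value = props.getProperty(LAUNCH_PROPERTY);
        if (value == null || value.trim().isEmpty()) {
            return defaultQualifier;
        }
        return value.trim();
    }

    public static void main(String[] args) {
        // Run with -Darquillian.launch=xy-tomcat-managed-6 to see the override take effect
        System.out.println(selectedQualifier(System.getProperties(), "tomcat-managed-6"));
    }
}
```

In an IDE, the property is typically added to the VM arguments of the JUnit run configuration, e.g. -Darquillian.launch=xy-tomcat-managed-6.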

A simple REST API test

The simplest test cases that can be run with Arquillian without much further configuration / adaption are tests of the Alfresco REST API. The following test case runs with the configuration of all previous snippets (only adding a dependency on org.jboss.resteasy:resteasy-jaxrs and org.json:json):

    import java.io.File;
    import java.net.URL;

    import org.jboss.arquillian.container.test.api.Deployment;
    import org.jboss.arquillian.container.test.api.RunAsClient;
    import org.jboss.arquillian.junit.Arquillian;
    import org.jboss.arquillian.test.api.ArquillianResource;
    import org.jboss.resteasy.client.ClientRequest;
    import org.jboss.resteasy.client.ClientResponse;
    import org.jboss.shrinkwrap.api.ShrinkWrap;
    import org.jboss.shrinkwrap.api.importer.ZipImporter;
    import org.jboss.shrinkwrap.api.spec.WebArchive;
    import org.jboss.shrinkwrap.resolver.api.DependencyResolvers;
    import org.jboss.shrinkwrap.resolver.api.maven.MavenDependencyResolver;
    import org.json.JSONObject;
    import org.junit.Assert;
    import org.junit.Test;
    import org.junit.runner.RunWith;

    @RunWith(Arquillian.class)
    public class SimpleLoginRemoteTest {

        // HTTP base URL specific to our test deployment
        @ArquillianResource
        private URL baseURL;

        // build the Repository WAR we want to test
        @Deployment
        public static WebArchive createDeployment() throws Exception {
            // initialize Maven resolver from our project POM (specifically: repository-configuration to retrieve artifacts)
            final MavenDependencyResolver resolver = DependencyResolvers.use(MavenDependencyResolver.class)
                    .loadMetadataFromPom("pom.xml");

            // we want a standard Alfresco WAR for our tests - no modifications
            final File[] files = resolver.artifact("org.alfresco.enterprise:alfresco:war:4.1.4")
                    .exclusion("*:*").resolveAsFiles();

            // there is a simpler "createFromZipFile" method, but we want to provide a custom webapp-name
            // to avoid deployment conflicts
            // files[0] is the resolved alfresco.war
            final WebArchive webArchive = ShrinkWrap.create(WebArchive.class, "SimpleLoginRemoteTest.war")
                    .as(ZipImporter.class).importFrom(files[0]).as(WebArchive.class);
            return webArchive;
        }

        @RunAsClient
        @Test
        public void testAdminRESTLogin() throws Exception {
            // use JBoss resteasy-library + org.json (add to POM) to perform a login
            final ClientRequest loginRequest = new ClientRequest(this.baseURL.toURI() + "s/api/login");
            loginRequest.accept("application/json");

            final JSONObject loginReqObj = new JSONObject();
            loginReqObj.put("username", "admin").put("password", "admin");
            loginRequest.body("application/json", loginReqObj.toString());

            final ClientResponse<String> loginResponse = loginRequest.post(String.class);
            Assert.assertEquals("Login failed", 200, loginResponse.getStatus());
        }
    }

Some elaboration on the example:

- Arquillian-specific test case classes need to be executed with the corresponding Arquillian runner, which controls the lifecycle a bit differently from the standard JUnit runner.

- Each test case comes with a static method with a @Deployment annotation, which builds the artifact to test using ShrinkWrap. This allows for a specific combination of the components under scrutiny and the inclusion of custom configuration.

- Test methods with the @RunAsClient annotation are run by Arquillian within the JUnit process / context and don’t have access to beans of the Alfresco Repository. If this annotation is missing, Arquillian will execute the test method within the Alfresco Repository web application via the ArquillianServletRunner servlet automatically added to the WebArchive.

- Test methods with @RunAsClient that need to access the Alfresco Repository remotely can use a URL instance field annotated with @ArquillianResource to get the HTTP base URL for the context of the web application.

- When the MavenDependencyResolver is used to build the deployment based on the project POM, the necessary artifact repositories for Alfresco need to be added to the POM (i.e. http://artifacts.alfresco.com/…).

When running the tests from an IDE or via mvn test, Arquillian will start the Tomcat instance, build the test artifacts via the callback methods and transfer / deploy them in Tomcat via the manager web application. As long as the Tomcat instance has been properly set up, a green bar or the BUILD SUCCESS message should be the result.
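The REST test above builds the login address by appending "s/api/login" to the injected base URL, which relies on the base URL ending with a slash. The following stdlib-only sketch shows a slightly more defensive way to combine the two parts. RepositoryUrls is a hypothetical helper introduced here for illustration, and the host, port and context name in main are assumptions taken from the example configuration.

```java
import java.net.URI;

// Hypothetical helper: combines the deployment base URL with a relative service path.
final class RepositoryUrls {

    private RepositoryUrls() {
    }

    // Resolves a service path such as "s/api/login" against the web application base URL
    static URI serviceUri(String baseUrl, String servicePath) {
        // ensure the base ends with "/" so that resolve() appends instead of replacing the last segment
        String base = baseUrl.endsWith("/") ? baseUrl : baseUrl + "/";
        // strip a leading "/" so the path stays relative to the web application context
        String relative = servicePath.startsWith("/") ? servicePath.substring(1) : servicePath;
        return URI.create(base).resolve(relative);
    }

    public static void main(String[] args) {
        // In a test, the first argument would be baseURL.toExternalForm()
        System.out.println(serviceUri("http://localhost:8680/SimpleLoginRemoteTest", "s/api/login"));
    }
}
```

Inside a test method, the result could be passed on as new ClientRequest(RepositoryUrls.serviceUri(this.baseURL.toExternalForm(), "s/api/login").toString()).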

A simple (service) bean test

In order to have Arquillian tests cover (service) beans, a test class needs direct access to all beans defined in the Spring application context of the Alfresco Repository. In contrast to the regular Alfresco JUnit tests (e.g. NodeServiceTest), Arquillian tests cannot make use of the ApplicationContextHelper, as this would start a new application context within the already running web application. Since the various test methods (with @RunAsClient and without) are mixed in one class, which is executed in both the JUnit process and the managed Alfresco Repository, it is also not possible to simply use @Before / @BeforeClass methods and retrieve the Spring application context manually using ContextLoader.getCurrentWebApplicationContext(). A simple customization during the creation of the deployment enables the use of @Autowired and @Qualifier annotations to have Arquillian inject the necessary beans automatically via the ArquillianServletRunner.

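    import java.io.File;
    import java.util.List;

    import org.alfresco.repo.security.authentication.AuthenticationUtil;
    import org.alfresco.repo.transaction.RetryingTransactionHelper.RetryingTransactionCallback;
    import org.alfresco.service.cmr.repository.NodeService;
    import org.alfresco.service.cmr.repository.StoreRef;
    import org.alfresco.service.transaction.TransactionService;
    import org.jboss.arquillian.container.test.api.Deployment;
    import org.jboss.arquillian.junit.Arquillian;
    import org.jboss.arquillian.spring.integration.test.annotation.SpringWebConfiguration;
    import org.jboss.shrinkwrap.api.ShrinkWrap;
    import org.jboss.shrinkwrap.api.importer.ZipImporter;
    import org.jboss.shrinkwrap.api.spec.WebArchive;
    import org.jboss.shrinkwrap.resolver.api.DependencyResolvers;
    import org.jboss.shrinkwrap.resolver.api.maven.MavenDependencyResolver;
    import org.junit.Assert;
    import org.junit.Test;
    import org.junit.runner.RunWith;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.beans.factory.annotation.Qualifier;

    @RunWith(Arquillian.class)
    @SpringWebConfiguration
    public class SimpleNodeServiceLocalTest {

        @Deployment
        public static WebArchive createDeployment() {
            final MavenDependencyResolver resolver = DependencyResolvers.use(MavenDependencyResolver.class)
                    .loadMetadataFromPom("pom.xml");
            final File[] files = resolver.artifact("org.alfresco.enterprise:alfresco:war:4.1.4")
                    .exclusion("*:*").resolveAsFiles();

            final WebArchive webArchive = ShrinkWrap.create(WebArchive.class, "SimpleNodeServiceLocalTest.war")
                    .as(ZipImporter.class).importFrom(files[0]).as(WebArchive.class);

            // add the context file that activates annotation-based auto-wiring
            webArchive.addAsResource("arquillian-alfresco-context.xml",
                    "alfresco/extension/arquillian-alfresco-context.xml");
            return webArchive;
        }

        @Autowired
        @Qualifier("NodeService")
        protected NodeService nodeService;

        @Autowired
        @Qualifier("TransactionService")
        protected TransactionService transactionService;

        @Test
        public void testNodeService() throws Exception {
            Assert.assertNotNull("NodeService not injected", this.nodeService);
            Assert.assertNotNull("TransactionService not injected", this.transactionService);

            // tests run without authentication, so set it up explicitly
            AuthenticationUtil.setFullyAuthenticatedUser("admin");
            try {
                // run the checks in a read-only transaction
                this.transactionService.getRetryingTransactionHelper().doInTransaction(
                        new RetryingTransactionCallback<Void>() {
                            public Void execute() throws Throwable {
                                final List<StoreRef> stores = SimpleNodeServiceLocalTest.this.nodeService.getStores();
                                Assert.assertFalse("List of stores is empty", stores.isEmpty());

                                // check default / standard stores
                                Assert.assertTrue("Store workspace://SpacesStore not contained in list of stores",
                                        stores.contains(StoreRef.STORE_REF_WORKSPACE_SPACESSTORE));
                                Assert.assertTrue("Store archive://SpacesStore not contained in list of stores",
                                        stores.contains(StoreRef.STORE_REF_ARCHIVE_SPACESSTORE));
                                return null;
                            }
                        }, true);
            } finally {
                AuthenticationUtil.clearCurrentSecurityContext();
            }
        }
    }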

The corresponding arquillian-alfresco-context.xml looks like this:

    <?xml version='1.0' encoding='UTF-8'?>
    <beans xmlns="http://www.springframework.org/schema/beans"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xmlns:context="http://www.springframework.org/schema/context"
        xsi:schemaLocation="
            http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-3.0.xsd
            http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-3.0.xsd">

        <context:annotation-config />
    </beans>

Some elaboration on the example:

- The @SpringWebConfiguration annotation declares that beans from the Spring context of the tested web application should be injected when running the test. In case servlets define specific contexts, it is possible to target these via an optional parameter.

- Using @Autowired and @Qualifier injects specific beans via the Spring auto-wiring capability into the test class when it is executed in the container. Auto-wiring is not enabled by default in Alfresco and needs to be enabled by adding a custom XML context configuration file to the WAR. This file does not alter anything substantial in the Spring configuration of Alfresco but implicitly activates the auto-wiring capability.

- Test methods run without any authentication or transactional context. If a test requires either of these, it is the responsibility of the test to properly initiate the context. When public service beans (e.g. “NodeService”) are tested, it may be possible to leave out the initialization of the transaction context if the test covers an atomic operation, since public beans (correctly configured) automatically take care of transaction handling.

- The project POM depends on the Alfresco Repository JAR of the corresponding Alfresco version to compile the test case.

Open issues / pain points

The provided examples allow universal testing of Alfresco in a “managed Tomcat” scenario. As is the case with all testing approaches, there are (still) some issues / problems limiting its usability / effectiveness. The following are the most critical issues / pain points from my point of view:

- Duration of deployment – Each deployment of a test-case-specific Alfresco Repository WAR takes an enormous amount of time for the transfer, bootstrapping and shutdown of the various application components. Running the tests on my Lenovo T520 with a current SSD and 16 GiB RAM it takes about three minutes for only two test cases (complexity slightly higher than the examples). This might be bearable for individual component tests and even provide a welcome coffee break, but larger and frequent integration tests in a continuous integration context could be problematic.

- Memory usage – Multiple deployments into Tomcat require a huge amount of memory, especially for the permanent generation. Depending on the equipment available in the development environment, it might not be possible to accommodate this requirement. In that case, only a single or a few test cases can be executed in one run.

- Deployment errors – Some of the test execution runs in my environment resulted in errors during the (un)deployment of the web application which could only be resolved through manual intervention. This needs to be resolved for a continuous integration use case.

- Minor inconsistencies across Tomcat versions – During a test of Alfresco 4.2 on Tomcat 7, Arquillian did not automatically inject the ArquillianServletRunner servlet into the web.xml. This led to service beans not being testable. A workaround for this problem is to manually include an adapted web.xml with an explicit configuration of the ArquillianServletRunner servlet during construction of the WebArchive.

- Provisioning of a consistent test data set – Individual tests may require a very specific state of the content repository data (database, file content, index). Management of the data is handled externally in a “managed Tomcat” scenario outside of the test case logic and may not be influenced without environment-specific code.
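For the Tomcat 7 workaround mentioned in the list above, the entries to add to a copy of the Alfresco web.xml might look like the following fragment. This is a sketch based on the servlet class and mapping that the Arquillian Servlet protocol normally registers on its own. Both names should be verified against the Arquillian version in use before relying on them.

```xml
<!-- Assumed servlet class / mapping of the Arquillian Servlet protocol; verify for your version -->
<servlet>
    <servlet-name>ArquillianServletRunner</servlet-name>
    <servlet-class>org.jboss.arquillian.protocol.servlet.runner.ServletTestRunner</servlet-class>
</servlet>
<servlet-mapping>
    <servlet-name>ArquillianServletRunner</servlet-name>
    <url-pattern>/ArquillianServletRunner</url-pattern>
</servlet-mapping>
```

The adapted file can then be set on the archive in the @Deployment method, for example via the setWebXML method of the ShrinkWrap WebArchive.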

Future blog posts are meant to address some of these issues and provide / document possible solutions.

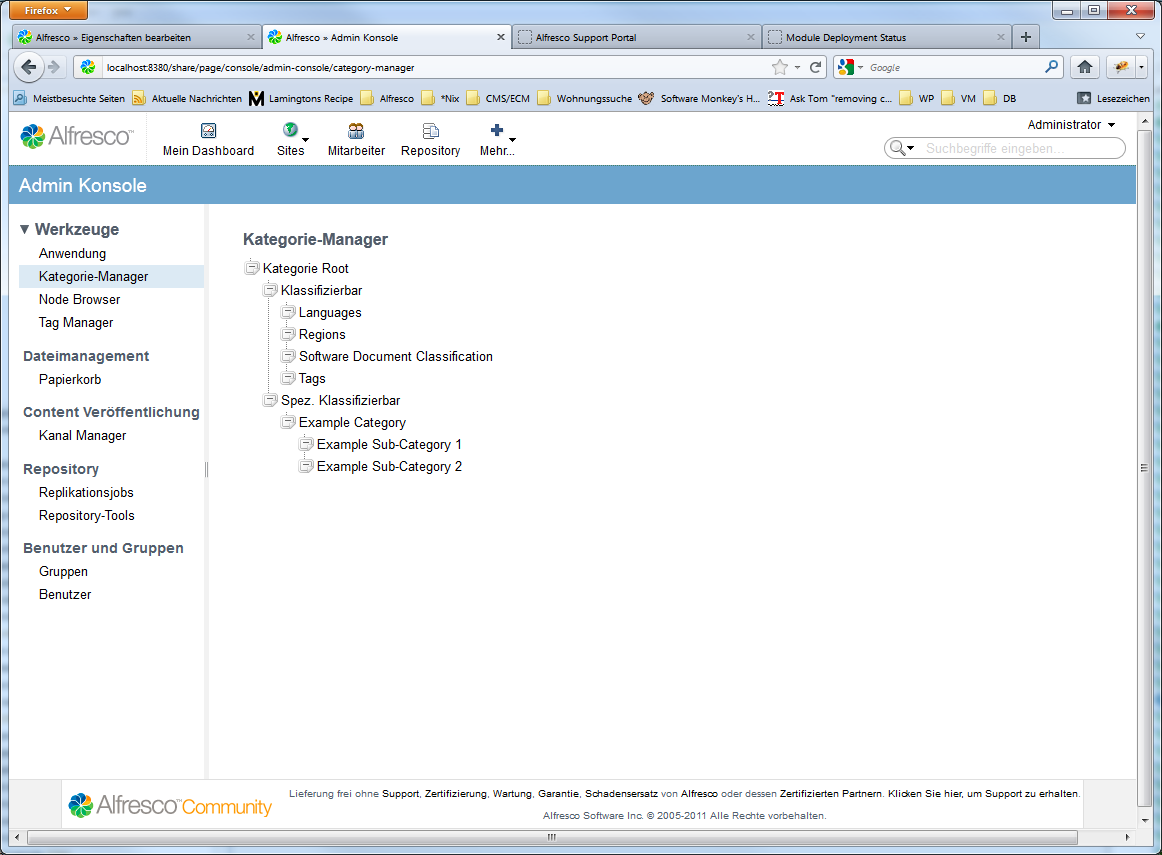

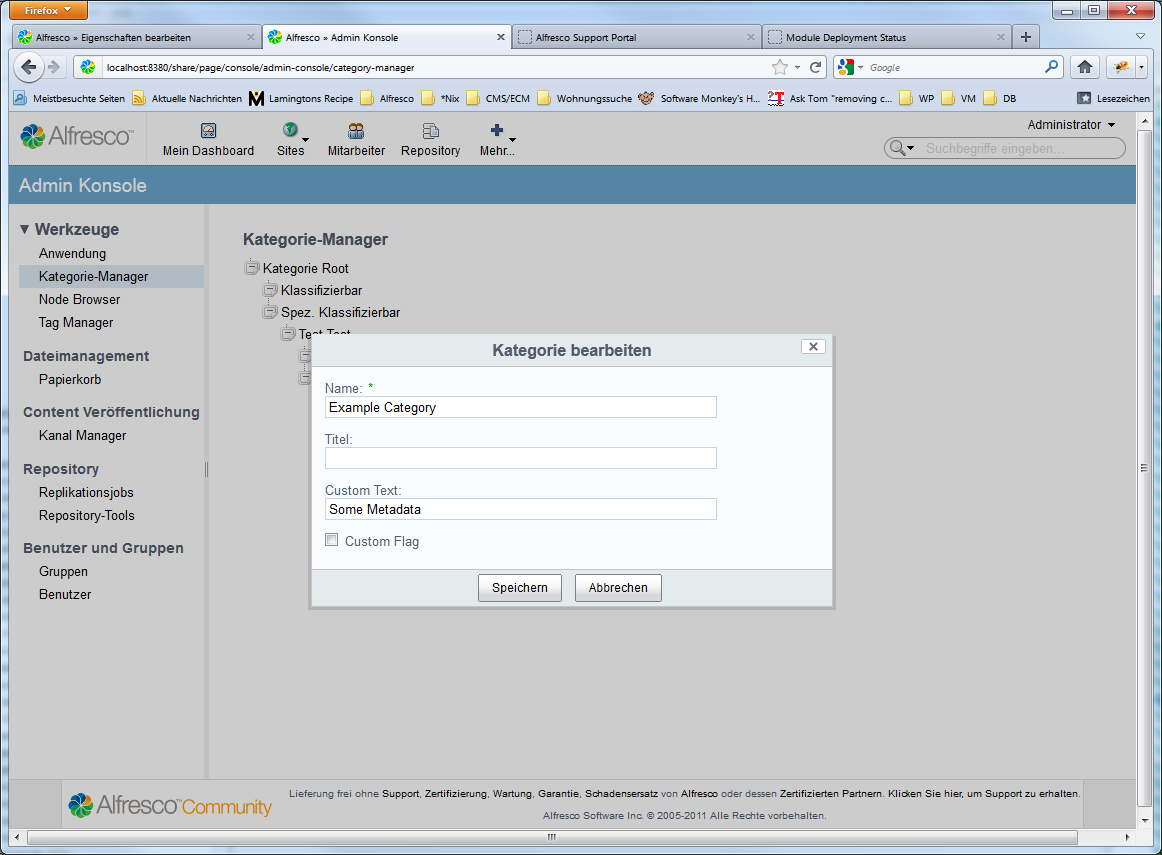

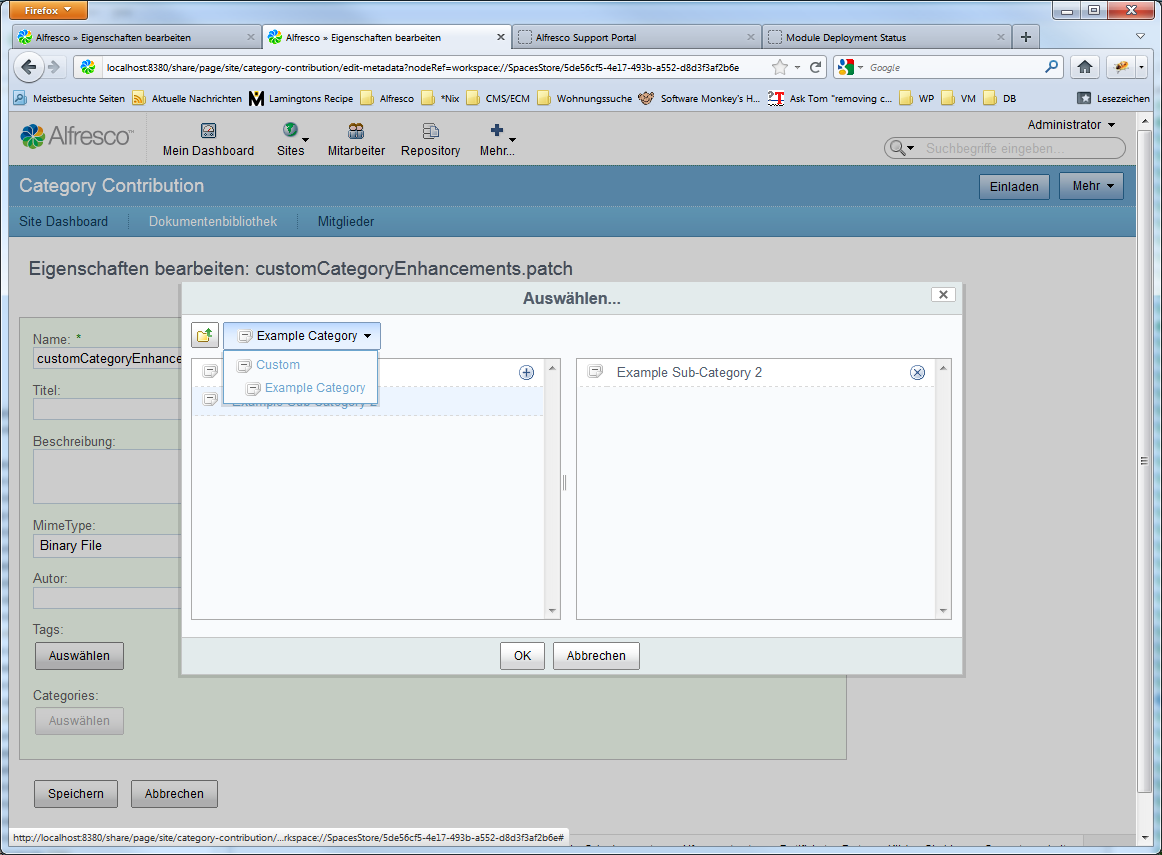
